I’m writing a feature for my plugin that will have to loop through all nodes in the file and for that I want to create a progress bar that will be shown to the user so they know how much time the processing will approximately take. For that I need to estimate the size of the search tree.

At first I was trying to create an algorithm that simply found an average depth to the power of branching factor and random sampling, the variance was too big to be useful (from 100s to billions). Then I switched to finding a median of that value, it was better but still is pretty far from the reality. It overestimated a lot.

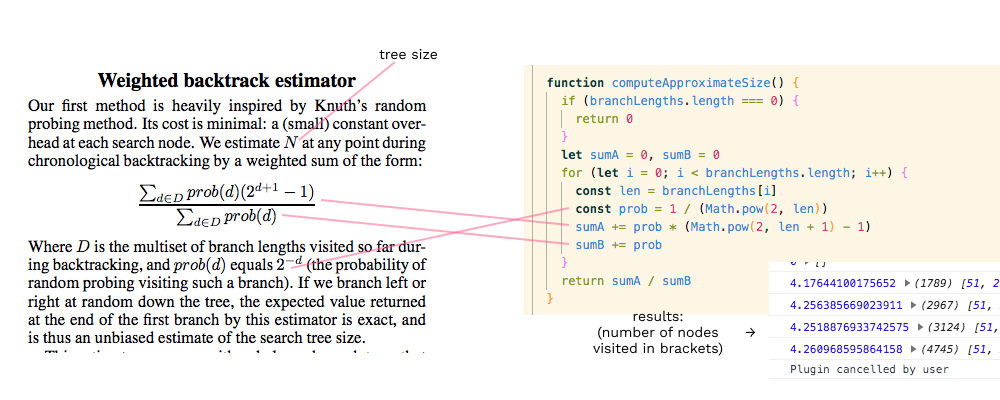

So I started googling and found this paper. After reading through it about 50 times and googling words such as backtracking, random probing, multiset, I got a rough idea of how it should work. Here is the algorithm that I have so far and the results it produces:

The results seem to be close to 4-5 depending on the file. Which looks more like simply a general branching factor than an estimated tree size. I’m probably doing something wrong here but I don’t understand what. But I somewhat understand the issue: it only takes branching factor into consideration, the depth is completely ignored.

Can anyone point me in the right direction and help me understand described algorithm better? Or maybe suggest a better one?

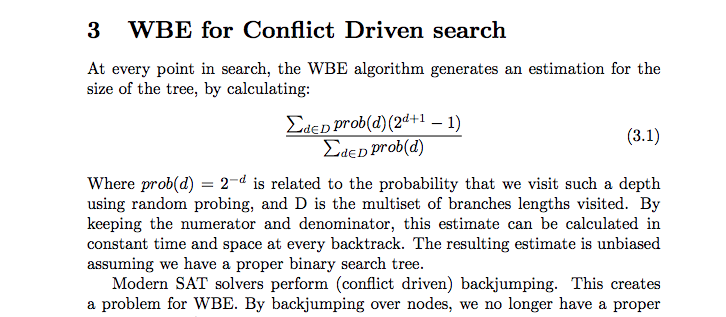

Update 1: found this article that shares the same formula. It gives me the idea that I need to calculate this for every node and store it but I don’t see any relationship between nodes in this formula. It seems to need only the multiset (array) of nodes branches lengths that I visited to work.

Update 2: Reading the above text more carefully “prob(d)… is related to the probability that we visit such a depth using random probing”. Which depth is it talking about? The depth at which we are calculating it? And how is it related? I’m so confused…